Abstract

Practical zero-knowledge proofs have remained an elusive promise in cryptography for nearly four decades, finding limited application despite a powerful guarantee of verification without disclosure. We propose that Large Language Models (LLMs) can serve as practical zero-knowledge verifiers because of an apparent limitation: their stateless, memoryless architecture. This inherent amnesia during inference, where sensitive data is processed and immediately forgotten, provides a natural foundation for zero-knowledge systems. This approach eliminates the traditional tradeoff between verification efficacy and information security. As Zero-Knowledge Verifiers (ZKVs), LLMs can close trust gaps in cybersecurity, AI safety, and international agreements.

1. Introduction

The concept of zero-knowledge verification has captivated the cryptographic community since its introduction by Goldwasser, Micali, and Rackoff in 1985. The work contributed to Goldwasser and Micali receiving the Turing Award and spawned decades of research, yet practical implementation has remained elusive.

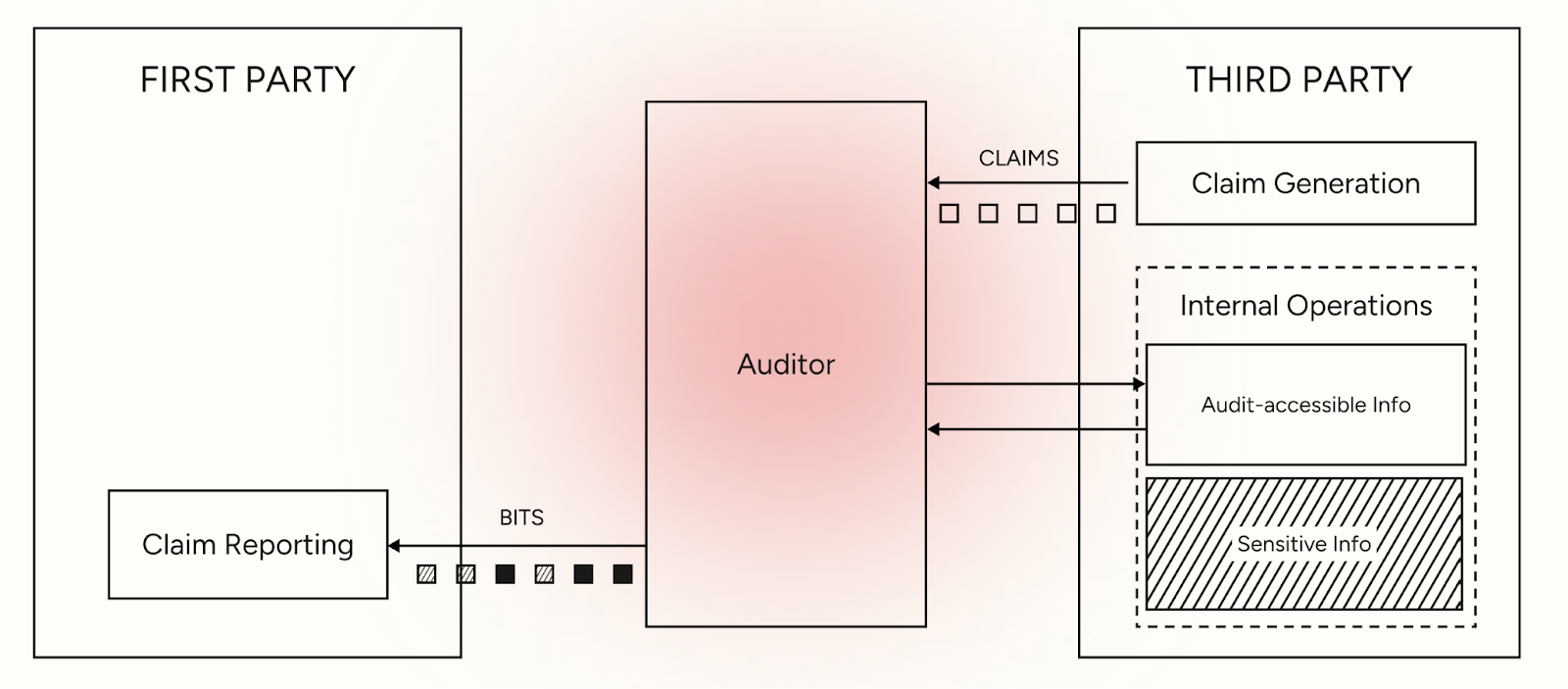

Goldwasser et al. demonstrated that one can prove specific claims such as "I know this password," "I possess sufficient funds," or "I computed this result correctly," while revealing nothing beyond the truth of the statement itself. This counterintuitive idea upends traditional verification, where proving a claim requires exposing the underlying evidence: a financial auditor must have access to all the books and sample them selectively to produce an opinion; a potential acquirer demands to review source code and other sensitive proprietary information when conducting diligence for a technology transaction. But what if you could validate every financial transaction without revealing the details, or demonstrate innovative capacity without disclosing proprietary information? That is the unrealised promise of zero-knowledge proofs.

2. The Promise and Problems of Zero-Knowledge Proofs

Goldreich, Micali, and Wigderson demonstrated that, under standard cryptographic assumptions, every problem in NP has a zero-knowledge proof, meaning that theoretically, we can create zero-knowledge proofs for virtually any efficiently verifiable statement. This includes complex assertions like "this software contains no backdoors," "this company complies with GDPR," or "this financial model accurately reflects risk."

If such a capability were practicable, it would transform trust-based interactions across all industries. Consider supply chain security, an area of particular interest to the authors of this paper and of critical importance to modern infrastructure. Software vendors could demonstrate their security posture without exposing sensitive details such as network architecture, access controls, or active vulnerabilities. Customers could verify the absence of backdoors without reviewing source code. Organizations could assess their entire supply chain's security and compliance without accessing sensitive supplier information. This would fundamentally shift the Pareto frontier, enabling robust, highly automated security guarantees without the traditional tradeoff of exposing defensive techniques and proprietary approaches.

Despite their theoretical elegance, zero-knowledge proofs have achieved limited practical adoption beyond specialized applications. The core challenge lies in implementation: even simple zero-knowledge proofs require specialized cryptographic expertise and custom circuit construction. Scaling this to verify entire compliance frameworks, M&A agreements, or software supply chain standards — all defined in natural language rather than mathematical logic — remains beyond current practical reach.

3. LLMs as Natural Zero-Knowledge Verifiers

LLMs present a compelling solution to these limitations through a unique set of capabilities that recreate the benefits of zero-knowledge proofs.

First, LLMs operate in the natural language used to define controls, agreements, and standards. Unlike traditional zero-knowledge proof systems that require mathematical formalization, LLMs can directly interpret requirements like "ensure all customer data is encrypted at rest" or "maintain audit logs for seven years." This eliminates the translation gap that has historically made zero-knowledge proofs difficult to adapt to arbitrary tasks.

Second, modern LLMs are capable of solving complex tasks across a wide range of domains. They can analyze documents, evaluate evidence, cross-reference requirements, and make sophisticated judgments about compliance and security. When fine-tuned on specific domains, their accuracy can approach that of human experts. Crucially, they are not tied to any single domain, and they can use tool calls in an agentic framework to explore their environment during inference.

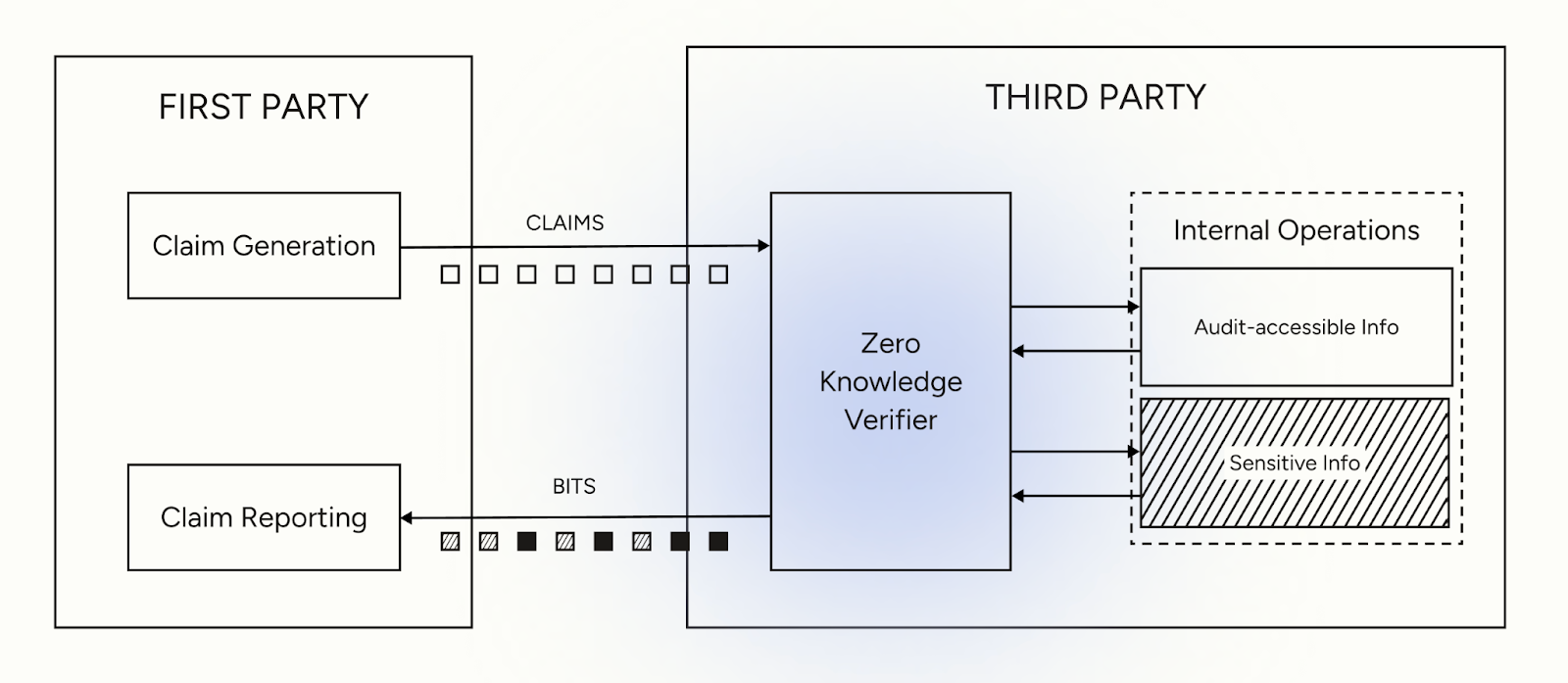

Third, LLMs are stateless across sessions. For our use case, this is perhaps the most critical aspect of LLM computation. Unlike human verifiers, who retain memories, form impressions, and might inadvertently or deliberately share sensitive information, LLMs process information without persistent memory. Once computation completes, only the model output remains: the weights do not change, no gradients are stored, and no hidden states persist. Provided the serving environment keeps no logs or caches, each inference is isolated.

4. A New Tool for the Verification Toolkit

4.1 Transforming the Verification Landscape

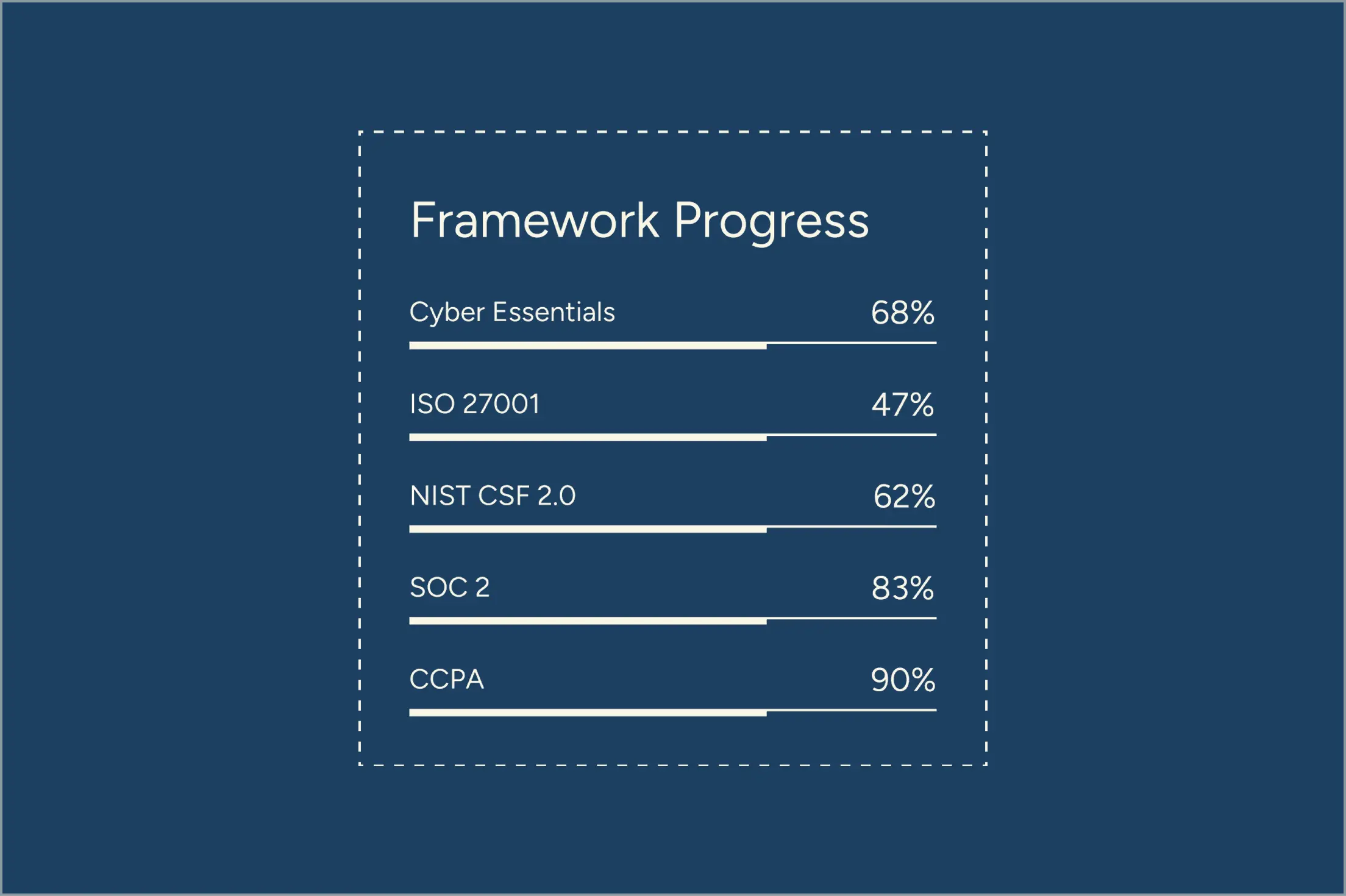

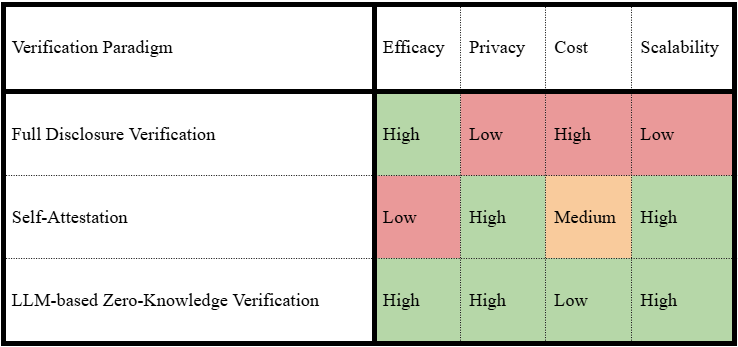

The verification landscape has long been constrained by fundamental tradeoffs, as shown in Table x.

Table x: Comparison of verification paradigms across key dimensions

Organizations currently choose between two unsatisfactory options. Full disclosure verification (traditional audits, M&A due diligence, expansive security assessments) provides thorough assurance but requires exposing sensitive systems and data while incurring significant time and cost. Self-attestation and questionnaires preserve privacy and scale efficiently but lack the credibility needed for genuine assurance.

LLM-based verification breaks this tradeoff. By processing natural language requirements through stateless inference, LLMs can rigorously verify claims while revealing only the verification result, not the underlying data. This enables verification that is simultaneously effective, private, and scalable.
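The shape of such a verifier can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `ModelFn`, `verify`, and `toy_model` are hypothetical names introduced here, and `toy_model` is a keyword-matching stand-in for a real LLM call. The point it shows is the interface: evidence goes in, and a single boolean comes out.

```python
from typing import Callable

# Hypothetical type: any function that maps a prompt string to a text completion.
ModelFn = Callable[[str], str]


def verify(model: ModelFn, requirement: str, evidence: str) -> bool:
    """Return only whether the evidence satisfies the requirement.

    The evidence is held in local variables only. Nothing is logged or
    cached here, so this function keeps no trace of it after returning.
    """
    prompt = (
        "Requirement:\n" + requirement + "\n\n"
        "Evidence:\n" + evidence + "\n\n"
        "Answer with exactly PASS or FAIL."
    )
    raw = model(prompt)
    return raw.strip().upper() == "PASS"


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM call (assumption): passes if the evidence mentions AES-256."""
    evidence = prompt.split("Evidence:\n", 1)[1]
    return "PASS" if "AES-256" in evidence else "FAIL"


if __name__ == "__main__":
    requirement = "ensure all customer data is encrypted at rest"
    print(verify(toy_model, requirement, "All storage volumes use AES-256."))
    print(verify(toy_model, requirement, "Backups are stored unencrypted."))
```

The caller receives only the verdict. Whether the surrounding infrastructure also forgets the evidence depends on the deployment, which the sketch does not model.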

4.2 The Imperative for Verifiable Claims in an Age of AI

The proliferation of powerful AI and the increasing complexity of our digital infrastructure create an urgent need for new verification paradigms. The traditional models of trust are no longer sufficient. LLM-based verifiers can address critical, emerging challenges where proof is necessary but transparency is impossible or dangerous.

- Supply Chain Security: Modern software companies like the major AI labs depend on complex vendor ecosystems where security verification traditionally requires exposing intellectual property and internal security measures — information that itself creates vulnerability. LLM verifiers allow vendors to prove security properties without revealing proprietary code or infrastructure details, enabling rigorous supply chain verification without the risks of disclosure.

- AI Safety and Alignment: As AI models become more autonomous, society needs mechanisms to verify safety claims without stifling innovation. Developers could use LLM verifiers to prove their models weren't trained on harmful datasets, incorporate safety principles, or resist specific misuse patterns — enabling meaningful oversight without exposing proprietary architectures.

- International Agreements: In a multipolar world, verification of treaty compliance often fails due to sovereignty concerns. LLM verifiers could serve as neutral arbiters, analyzing sensitive military or industrial data within national borders while outputting only compliance status. This enables credible verification of AI weapons treaties, climate commitments, or supply chain agreements without politically fraught inspections.

7. Challenges and Limitations

7.1 Technical Challenges

While promising, implementing LLM ZKVs presents significant technical hurdles.

First, the verifier must operate in a truly ephemeral environment where no information persists beyond the verification result. This requires not only secure processing environments but also strict output control (even minimal extraneous output could become a side channel for data leakage). Every bit returned must be necessary for the verification claim and nothing more.
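One way to picture strict output control is a filter that collapses whatever the model emits into a small fixed vocabulary. The sketch below is illustrative only; `ALLOWED_VERDICTS`, `constrain_output`, and the `INCONCLUSIVE` fallback are assumptions introduced here rather than part of any existing system.

```python
# Hypothetical fixed vocabulary of verdicts that may leave the environment.
ALLOWED_VERDICTS = frozenset({"PASS", "FAIL"})


def constrain_output(raw_output: str) -> str:
    """Collapse raw model text to one of three fixed tokens.

    Anything that is not exactly an allowed verdict is replaced by
    INCONCLUSIVE, so free-form text can never leave the environment.
    """
    candidate = raw_output.strip().upper()
    if candidate in ALLOWED_VERDICTS:
        return candidate
    return "INCONCLUSIVE"


if __name__ == "__main__":
    print(constrain_output(" pass\n"))
    print(constrain_output("FAIL because the admin password is stored in plaintext"))
```

With three possible outputs, a single verification can convey at most log2(3), roughly 1.58, bits, regardless of how much sensitive material the model read.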

A second major challenge lies in managing the verifier's potential for error, whether due to inaccuracy or adversarial manipulation. To address model inaccuracy, one could introduce mechanisms for uncertainty quantification. One simple approach would be to run diverse verifiers against the same evidence; the level of disagreement among them would provide a useful signal of accuracy. To deter adversarial behaviour against the verifier, one might introduce random "spot checks," where a human auditor reviews a small, randomly selected subset of the evidence and the LLM's reasoning trace. While this technique leaks a small amount of information in expectation, it creates a significant game-theoretic disincentive for any party attempting to deceive the verifier, thereby increasing the overall integrity of the system.
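Both mechanisms are simple to sketch. The Python below is a rough illustration under assumptions: the function names, the verdict strings, and the 5% sampling rate are hypothetical choices made here, not values proposed in this paper.

```python
import random
from collections import Counter
from typing import List, Optional, Sequence


def disagreement_rate(verdicts: Sequence[str]) -> float:
    """Fraction of verifiers whose verdict differs from the majority verdict."""
    if not verdicts:
        raise ValueError("need at least one verdict")
    majority_count = Counter(verdicts).most_common(1)[0][1]
    return 1.0 - majority_count / len(verdicts)


def select_spot_checks(
    evidence_ids: Sequence[str], rate: float, seed: Optional[int] = None
) -> List[str]:
    """Pick a random subset of evidence items for human review.

    The seed is only for reproducible demos. A deployment would want an
    unpredictable source such as random.SystemRandom, so that the verified
    party cannot guess which items will be reviewed.
    """
    if not evidence_ids:
        return []
    rng = random.Random(seed)
    k = max(1, round(rate * len(evidence_ids)))
    return rng.sample(list(evidence_ids), k)


if __name__ == "__main__":
    print(disagreement_rate(["PASS", "PASS", "FAIL", "PASS"]))
    ids = [f"doc-{i}" for i in range(100)]
    print(select_spot_checks(ids, rate=0.05, seed=0))
```

A high disagreement rate would flag a verification for escalation, while the sampling rate controls how much evidence a human sees in expectation.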

7.2 Trust and Adoption Barriers

The human element presents challenges as significant as the technical ones. Regulatory acceptance stands as a critical hurdle — regulators must be convinced that LLM-based verification provides assurance equivalent to traditional manual techniques. This will require extensive validation and likely updates to existing regulatory frameworks. The question of liability adds another layer of complexity: when an LLM verifier makes an error, who bears responsibility? Answering this requires developing new legal frameworks and insurance models. Beyond regulatory concerns, cultural resistance may slow adoption. Organizations that have relied on human auditors for decades may struggle to trust AI systems with critical compliance decisions. Perhaps most challenging is the tension around explainability. Stakeholders naturally expect explanations for compliance decisions, yet zero-knowledge verification deliberately limits information disclosure. Resolving this tension requires carefully balancing transparency needs with the security and information protective benefits of this approach.

7.3 Game-Theoretic Long-Term Stability

The integrity of an LLM-based verification system also depends on the strategic incentives of the parties involved. From a game-theoretic perspective, we must consider potential adversarial attacks. Overt attacks, such as providing falsified information or attempting to "jailbreak" the model, are inherently unstable long-term strategies: discovery would lead to severe and permanent reputational damage, alongside potential legal and financial recourse. However, there could be subtler strategies that undermine LLM ZKVs, such as obfuscating information or restricting access. We believe that with careful mechanism design these too can be overcome.
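The instability of overt attacks can be made concrete with a stylised expected-payoff calculation. The symbols are assumptions introduced here for illustration: $G$ is the gain from a deception that goes undetected, $P$ is the penalty (reputational, legal, and financial) incurred on discovery, $q$ is the probability that a deception attempt is discovered, and honest behaviour is normalised to a payoff of zero.

$$
\mathbb{E}[\text{deceive}] = (1 - q)\,G - q\,P
$$

Deception is unprofitable whenever this quantity is negative, that is, when

$$
(1 - q)\,G - q\,P < 0 \quad\Longleftrightarrow\quad q > \frac{G}{G + P}.
$$

For example, if the penalty is nine times the gain ($P = 9G$), any discovery probability above $G / (G + 9G) = 0.1$ makes deception a losing strategy. The larger the penalty relative to the gain, the lower the discovery probability needed, which is why even infrequent checks can be an effective deterrent under this simple model.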

8. Future Directions

The future likely involves hybrid verification models that combine LLM ZKVs with other complementary technologies as part of a broader trust toolkit. Blockchain attestation offers the ability to record verification results on immutable ledgers, providing tamper-proof audit trails that enhance the credibility of the verification process. Additionally, homomorphic encryption could allow computation on encrypted data, providing additional privacy guarantees that further strengthen the zero-knowledge properties of the system.
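The tamper-evidence idea behind ledger attestation can be illustrated with a plain hash chain. The sketch below is not a blockchain and involves no consensus or distribution; it only shows how linking each verification result to the hash of the previous entry makes later edits detectable. The record fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash preceding the first entry


def _entry_hash(prev_hash: str, result: dict) -> str:
    """SHA-256 over the previous hash and a canonical JSON form of the result."""
    payload = json.dumps(result, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(chain: list, result: dict) -> dict:
    """Append a verification result, linked to the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    entry = {
        "result": result,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, result),
    }
    chain.append(entry)
    return entry


def chain_is_valid(chain: list) -> bool:
    """Recompute every hash and check that each entry links to its predecessor."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["result"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    ledger: list = []
    append_record(ledger, {"requirement": "encryption-at-rest", "verdict": "PASS"})
    append_record(ledger, {"requirement": "audit-log-retention", "verdict": "FAIL"})
    print(chain_is_valid(ledger))
    ledger[1]["result"]["verdict"] = "PASS"  # simulate tampering with a stored result
    print(chain_is_valid(ledger))
```

Only verification results are recorded, never the underlying evidence, so the audit trail does not weaken the disclosure guarantees.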

Industry-wide adoption requires comprehensive standardization efforts across multiple dimensions. Verification protocols need common standards for how LLM verifiers interact with verified parties, ensuring consistency and reliability across different implementations. Output formats require standardized schemas for verification results to ensure interoperability between different systems and stakeholders. Furthermore, certification frameworks must be established to create programs that certify LLM verifiers meet both privacy and accuracy requirements, building trust and confidence in the technology.

As the technology matures, emerging applications will expand the scope and utility of LLM ZKVs. Continuous compliance monitoring represents a significant advancement, enabling real-time verification that maintains zero-knowledge properties for ongoing regulatory adherence. Cross-border data verification could facilitate compliance verification across different jurisdictions without requiring actual data movement, addressing complex international regulatory requirements. Additionally, personal privacy verification could empower individuals to verify that their data is being handled appropriately without requiring access to corporate systems, democratizing privacy oversight and giving individuals greater control over their personal information.

9. Conclusion

LLMs represent an unexpected solution to a decades-old challenge in cryptography and verification. By combining natural language processing, universal computation capabilities, and stateless operation, they are able to provide the benefits of zero-knowledge verifiers whilst remaining practicable. LLM ZKVs are a viable pathway to transforming how we approach verification.

We have focused mainly on examples in cybersecurity, but the implications extend far beyond. Any scenario where verification requires accessing sensitive information could benefit from LLM-based zero-knowledge verification. From healthcare compliance to financial audits, from supply chain verification to intellectual property protection, the ability to verify without disclosure could reshape how organizations share and protect information.

The cryptographic community has spent decades working to make zero-knowledge proofs practical, and the progress has been astonishing. But now we have machines that understand the world as we describe it. In building them, we have incidentally created zero-knowledge verifiers.

The golden apple has fallen from the tree.

References

Goldwasser, S., Micali, S., & Rackoff, C. (1985). The knowledge complexity of interactive proof systems. Proceedings of the 17th Annual ACM Symposium on Theory of Computing.

Goldreich, O., Micali, S., & Wigderson, A. (1991). Proofs that yield nothing but their validity or all languages in NP have zero-knowledge proof systems. Journal of the ACM, 38(3), 690-728.
